execo_g5k

Execo extension and tools for Grid5000.

To use execo_g5k, passwordless, public-key based authentication must be used (either with appropriate g5k-specific public/private keys shared across all your home directories, or with an appropriate ssh-agent forwarding configuration).

The code can be run from a frontend, from a computer outside of Grid5000 (for this you need an appropriate configuration, see section Running from outside Grid5000), or from g5k nodes (in this case, you need to explicitly refer to the local Grid5000 site / frontend by name, and the node running the code needs to be able to connect to all involved frontends).

OAR functions

OarSubmission

class execo_g5k.oar.OarSubmission(resources=None, walltime=None, job_type=None, sql_properties=None, queue=None, reservation_date=None, directory=None, project=None, name=None, additional_options=None, command=None)

An oar submission. POD style class.

Members:

- resources: the requested resources for the job. Oar option -l, without the walltime. Can be a single resource specification or an iterable of resource specifications.

- walltime: job walltime. Walltime part of the oar -l option.

- job_type: job type, oar option -t: deploy, besteffort, cosystem, checkpoint, timesharing, allow_classic_ssh. Can be a single job type or an iterable of job types.

- sql_properties: constraints on properties for the job, oar option -p (use single quotes for literal strings).

- queue: the queue to submit the job to. Oar option -q.

- reservation_date: request that the job start at a specified time. Oar option -r.

- directory: the directory in which to launch the command (default is the current directory). Oar option -d.

- project: the name of a project the job belongs to. Oar option --project.

- name: an arbitrary name for the job. Oar option -n.

- additional_options: passed directly to oarsub on the command line.

- command: the command run by oarsub (default: sleep for a long time).
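To make the mapping between OarSubmission members and oarsub options concrete, here is a minimal sketch that turns a few of those members into an oarsub argument list. The Submission dataclass and the build_oarsub_args helper are hypothetical and are not part of execo_g5k. Joining several resource specifications with '+' is an assumption about how they combine, and execo's actual command line may differ.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Union

# A member may hold a single string or an iterable of strings.
StrOrSeq = Optional[Union[str, Sequence[str]]]


@dataclass
class Submission:
    """Hypothetical stand-in for a subset of OarSubmission's members."""
    resources: StrOrSeq = None
    walltime: Optional[str] = None
    job_type: StrOrSeq = None
    sql_properties: Optional[str] = None
    queue: Optional[str] = None
    name: Optional[str] = None


def _as_list(value):
    # Normalize "single value or iterable of values" to a list.
    if value is None:
        return []
    if isinstance(value, str):
        return [value]
    return list(value)


def build_oarsub_args(sub):
    """Map submission members to oarsub options (illustrative only)."""
    args = []
    # -l carries the resource specification(s) plus the walltime part.
    spec = "+".join(_as_list(sub.resources))
    if sub.walltime:
        part = "walltime=" + sub.walltime
        spec = spec + "," + part if spec else part
    if spec:
        args += ["-l", spec]
    # One -t option per job type.
    for job_type in _as_list(sub.job_type):
        args += ["-t", job_type]
    if sub.sql_properties:
        args += ["-p", sub.sql_properties]
    if sub.queue:
        args += ["-q", sub.queue]
    if sub.name:
        args += ["-n", sub.name]
    return args
```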

oarsub

execo_g5k.oar.oarsub(job_specs, frontend_connection_params=None, timeout=False, abort_on_error=False)

Submit jobs.

Parameters:

- job_specs – iterable of tuples (execo_g5k.oar.OarSubmission, frontend), with None for the default frontend.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- timeout – timeout for submitting. Default is False, which means use execo_g5k.config.g5k_configuration['default_timeout']. None means no timeout.

- abort_on_error – default False. If True, raises an exception on any error. If False, returns the list of jobs obtained, even if incomplete (some frontends may have failed to answer).

Returns a list of tuples (oarjob id, frontend), with frontend == None for the default frontend. If a submission fails, its oarjob id is None. The returned list matches the job_specs parameter, in the same order.
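Because the returned list follows the order of job_specs and failed submissions carry a None job id, each result can be paired back with the specification that produced it. The split_submissions helper below is a hypothetical sketch. Its illustrative data uses plain strings in place of real OarSubmission objects.

```python
def split_submissions(job_specs, results):
    """Pair each (submission, frontend) spec with its (job_id, frontend) result.

    Relies on results being in the same order as job_specs. A job_id of
    None marks a failed submission.
    """
    submitted, failed = [], []
    for spec, (job_id, frontend) in zip(job_specs, results):
        if job_id is None:
            failed.append(spec)
        else:
            submitted.append((job_id, frontend))
    return submitted, failed


# Illustrative data: strings stand in for OarSubmission instances.
specs = [("sub-a", "lyon"), ("sub-b", None)]
results = [(1234, "lyon"), (None, None)]
submitted, failed = split_submissions(specs, results)
```

The failed specifications can then be resubmitted, for instance to another frontend.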

oardel

execo_g5k.oar.oardel(job_specs, frontend_connection_params=None, timeout=False)

Delete oar jobs.

Ignores any error, so you can delete nonexistent jobs, already deleted jobs, or jobs that you don’t own. Those deletions are simply ignored.

Parameters:

- job_specs – iterable of tuples (job_id, frontend), with None for the default frontend.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- timeout – timeout for deleting. Default is False, which means use execo_g5k.config.g5k_configuration['default_timeout']. None means no timeout.

get_current_oar_jobs

execo_g5k.oar.get_current_oar_jobs(frontends=None, start_between=None, end_between=None, frontend_connection_params=None, timeout=False, abort_on_error=False)

Return a list of currently active oar job ids.

The list contains tuples (oarjob id, frontend).

Parameters:

- frontends – an iterable of frontends to connect to. A frontend with value None means the default frontend. If frontends == None, get current oar jobs only for the default frontend.

- start_between – a tuple (low, high) of endpoints. Filters and returns only jobs whose start date is between these endpoints.

- end_between – a tuple (low, high) of endpoints. Filters and returns only jobs whose end date is between these endpoints.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- timeout – timeout for retrieving. Default is False, which means use execo_g5k.config.g5k_configuration['default_timeout']. None means no timeout.

- abort_on_error – default False. If True, raises an exception on any error. If False, returns the list of jobs obtained, even if incomplete (some frontends may have failed to answer).

get_oar_job_info

execo_g5k.oar.get_oar_job_info(oar_job_id=None, frontend=None, frontend_connection_params=None, timeout=False, nolog_exit_code=False, nolog_timeout=False, nolog_error=False)

Return a dict with information about an oar job.

Parameters:

- oar_job_id – the oar job id. If None is given, will try to get it from the OAR_JOB_ID environment variable.

- frontend – the frontend of the oar job. If None is given, use the default frontend.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- timeout – timeout for retrieving. Default is False, which means use execo_g5k.config.g5k_configuration['default_timeout']. None means no timeout.

The returned dict may contain these keys:

- start_date: unix timestamp of the job’s start date

- walltime: the job’s walltime (seconds)

- scheduled_start: unix timestamp of the job’s start prediction (may change between invocations)

- state: job state. Possible states: ‘Waiting’, ‘Hold’, ‘toLaunch’, ‘toError’, ‘toAckReservation’, ‘Launching’, ‘Running’, ‘Suspended’, ‘Resuming’, ‘Finishing’, ‘Terminated’, ‘Error’. See table jobs, column state, in the oar documentation http://oar.imag.fr/sources/2.5/docs/documentation/OAR-DOCUMENTATION-ADMIN/#jobs

- name: job name

However, no information may be available as long as the job is not scheduled.
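Since any of these keys may be missing, code that consumes this dict should not assume they are present. The hypothetical job_summary helper below is a minimal sketch that summarizes such a dict. Treating 'Terminated' and 'Error' as the only final states is an assumption drawn from the state list above.

```python
import time

# Assumption: the states after which the job will never run again.
FINAL_STATES = {"Terminated", "Error"}


def job_summary(info, now=None):
    """Summarize a get_oar_job_info()-style dict whose keys may be missing."""
    now = time.time() if now is None else now
    if info.get("state") in FINAL_STATES:
        return "over"
    # Prefer the actual start date, fall back to the start prediction.
    start = info.get("start_date") or info.get("scheduled_start")
    if start is None:
        return "not scheduled yet"
    if start > now:
        return "starts in %d s" % (start - now)
    return "started"
```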

wait_oar_job_start

execo_g5k.oar.wait_oar_job_start(oar_job_id=None, frontend=None, frontend_connection_params=None, timeout=None, prediction_callback=None)

Sleep until an oar job’s start time.

As long as the job isn’t scheduled, wait_oar_job_start sleeps and polls every execo_g5k.config.g5k_configuration['polling_interval'] seconds until it is scheduled. Then, knowing its start date, it sleeps for the amount of time needed to wait for the job start.

Returns True if the wait was successful, False otherwise (job cancelled, error).

Parameters:

- oar_job_id – the oar job id. If None is given, will try to get it from the OAR_JOB_ID environment variable.

- frontend – the frontend of the oar job. If None is given, use the default frontend.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- timeout – timeout for retrieving. Default is None (no timeout).

- prediction_callback – function taking a unix timestamp as parameter. This function is called each time the oar job start prediction changes.
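Any callable that takes a single unix timestamp fits the prediction_callback parameter. As a minimal sketch, the hypothetical factory below returns a callback that records each new prediction in a list. UTC is used only so that the output does not depend on the local timezone. The intended usage would be to pass the returned function as prediction_callback.

```python
from datetime import datetime, timezone


def make_prediction_logger(log):
    """Return a callback that records each new start prediction in `log`.

    The callback receives a unix timestamp and appends a readable UTC string.
    """
    def on_prediction(ts):
        when = datetime.fromtimestamp(ts, tz=timezone.utc)
        log.append("job start predicted at %s UTC" % when.strftime("%Y-%m-%d %H:%M:%S"))
    return on_prediction
```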

get_oar_job_nodes

execo_g5k.oar.get_oar_job_nodes(oar_job_id=None, frontend=None, frontend_connection_params=None, timeout=False)

Return an iterable of execo.host.Host containing the hosts of an oar job.

This method waits for the job start (the list of nodes isn’t fixed until the job starts).

Parameters:

- oar_job_id – the oar job id. If None is given, will try to get it from the OAR_JOB_ID environment variable.

- frontend – the frontend of the oar job. If None is given, use the default frontend.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- timeout – timeout for retrieving. Default is False, which means use execo_g5k.config.g5k_configuration['default_timeout']. None means no timeout.

get_oar_job_subnets

execo_g5k.oar.get_oar_job_subnets(oar_job_id=None, frontend=None, frontend_connection_params=None, timeout=False)

Return a tuple containing an iterable of tuples (IP, MAC) and a dict containing the subnet parameters of the reservation (if any).

The subnet parameters dict has the keys ‘ip_prefix’, ‘broadcast’, ‘netmask’, ‘gateway’, ‘network’, ‘dns_hostname’, ‘dns_ip’.

Parameters:

- oar_job_id – the oar job id. If None is given, will try to get it from the OAR_JOB_ID environment variable.

- frontend – the frontend of the oar job. If None is given, use the default frontend.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- timeout – timeout for retrieving. Default is False, which means use execo_g5k.config.g5k_configuration['default_timeout']. None means no timeout.

get_oar_job_kavlan

execo_g5k.oar.get_oar_job_kavlan(oar_job_id=None, frontend=None, frontend_connection_params=None, timeout=False)

Return the list of vlan ids of a job (if any).

Parameters:

- oar_job_id – the oar job id. If None is given, will try to get it from the OAR_JOB_ID environment variable.

- frontend – the frontend of the oar job. If None is given, use the default frontend.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- timeout – timeout for retrieving. Default is False, which means use execo_g5k.config.g5k_configuration['default_timeout']. None means no timeout.

oarsubgrid

execo_g5k.oar.oarsubgrid(job_specs, reservation_date=None, walltime=None, job_type=None, queue=None, directory=None, additional_options=None, frontend_connection_params=None, timeout=False)

Similar to oargrid, but with parallel oar submissions.

The only difference for the user is that it returns a list of tuples (oarjob id, frontend) instead of an oargrid job id. It should run faster, since oar submissions are performed in parallel instead of sequentially, and because it bypasses the oargrid layer. The parameters reservation_date, walltime, job_type, queue, directory and additional_options of the submissions in job_specs are ignored and replaced by the arguments passed. As with oargridsub, all job submissions must succeed: if at least one job submission fails, all other jobs are deleted and an empty list is returned.

kadeploy3

The most user-friendly way to deploy with execo is to use the function execo_g5k.kadeploy.deploy, which adds a layer of “cleverness” over kadeploy. It allows:

- deploying over several sites simultaneously.

- using a test (parameter check_deployed_command: a shell command or construct) run on the deployed hosts to detect whether a host has been deployed. The benefit is that if you call deploy at the beginning of your experiment script, then when you relaunch the script while the hosts are already deployed, the deploy function detects that they are already deployed and does not redeploy them. The default check_deployed_command should work with almost all deployed images. If needed, this test can be tweaked or deactivated.

- retrying the deployment several times, each time redeploying only the hosts that fail the aforementioned test.

- for complex experiment scenarios, providing a callback function (parameter check_enough_func). It is called at the end of each deployment try with the lists of correctly deployed and failed hosts, and decides whether the deployed resources are sufficient for the experiment. If they are, no further deployment retry is done. This is useful for experiments needing complex and large grid topologies, where you can almost never get 100% deployment success but still want the experiment to proceed as soon as you have enough resources to set up your experiment topology.

Internally, execo_g5k.kadeploy.deploy uses the class execo_g5k.kadeploy.Kadeployer, which derives from the execo.action.Action class hierarchy. Kadeployer offers no special cleverness, but it handles simultaneous deployment to multiple sites. If needed, it also allows asynchronous control of multiple deployments in parallel.

Deployment¶

-

class

execo_g5k.kadeploy.Deployment(hosts=None, env_file=None, env_name=None, user=None, vlan=None, other_options=None)¶ A kadeploy3 deployment, POD style class.

-

env_file= None¶ filename of an environment to deploy

-

env_name= None¶ name of a kadeploy3 registered environment to deploy.

there must be either one of env_name or env_file parameter given. If none given, will try to use the default environement from

g5k_configuration.

-

hosts= None¶ hosts: iterable of

execo.host.Hoston which to deploy.

-

other_options= None¶ string of other options to pass to kadeploy3

-

user= None¶ kadeploy3 user

-

vlan= None¶ if given, kadeploy3 will automatically switch the nodes to this vlan after deployment

-

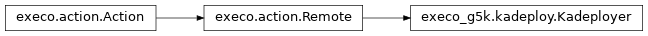

Kadeployer

class execo_g5k.kadeploy.Kadeployer(deployment, frontend_connection_params=None, stdout_handlers=None, stderr_handlers=None)

Bases: execo.action.Remote

Deploy an environment with kadeploy3 on several hosts.

Able to deploy in parallel to multiple frontends.

Parameters:

- deployment – instance of the Deployment class describing the intended kadeployment.

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- stdout_handlers – iterable of ProcessOutputHandlers which will be passed to the actual deploy processes.

- stderr_handlers – iterable of ProcessOutputHandlers which will be passed to the actual deploy processes.

Attributes:

- deployed_hosts = None: iterable of Host containing the deployed hosts. This iterable is not complete until the Kadeployer has terminated.

- deployment = None: instance of the Deployment class describing the intended kadeployment.

- frontend_connection_params = None: connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- ok: a boolean indicating whether all processes are ok. Refer to execo.process.ProcessBase.ok for the detailed semantics of being ok for a process.

- timeout = None: deployment timeout.

- undeployed_hosts = None: iterable of Host containing the hosts not deployed. This iterable is not complete until the Kadeployer has terminated.

deploy

execo_g5k.kadeploy.deploy(deployment, check_deployed_command=True, node_connection_params={'user': 'root'}, num_tries=1, check_enough_func=None, frontend_connection_params=None, deploy_timeout=None, check_timeout=30, stdout_handlers=None, stderr_handlers=None)

Deploy nodes, several times if needed, checking which of these nodes are already deployed with a user-supplied command. If no command is given for checking whether nodes are deployed, rely on kadeploy to know which nodes are deployed.

- loop num_tries times:

  - if check_deployed_command is given, try to connect to these hosts using the supplied node_connection_params (or the default ones), and execute check_deployed_command. If the connection succeeds and the command returns 0, the host is assumed to be deployed. Otherwise it is assumed to be undeployed.

  - optionally call the user-supplied check_enough_func, passing it the lists of deployed and undeployed hosts, to let user code decide whether enough nodes are deployed. Otherwise, keep trying as long as there are undeployed nodes.

  - deploy the undeployed nodes.

Returns a tuple with the list of deployed hosts and the list of undeployed hosts.

When checking correctly deployed nodes with check_deployed_command, and if the deployment uses the kavlan option, this function tries to contact the nodes using the appropriate DNS hostnames in the new vlan.

Parameters:

- deployment – instance of the execo_g5k.kadeploy.Deployment class describing the intended kadeployment.

- check_deployed_command – command to run remotely to check node deployment. May be a string, True, False or None. If a string: the actual command to be used (this command should return 0 if the node is correctly deployed, or another value otherwise). If True, the default command value is used (from execo_g5k.config.g5k_configuration). If None or False, no check is made and the deployed/undeployed status is taken from kadeploy’s output.

- node_connection_params – a dict similar to execo.config.default_connection_params whose values override those in execo.config.default_connection_params when connecting to check node deployment with check_deployed_command (see above).

- num_tries – number of deploy tries.

- check_enough_func – a function taking as parameters a list of deployed hosts and a list of undeployed hosts. It is called at the end of each deployment iteration and should return a boolean indicating whether there are already enough nodes (in which case no further deployment is attempted).

- frontend_connection_params – connection params for connecting to frontends if needed. Values override those in execo_g5k.config.default_frontend_connection_params.

- deploy_timeout – timeout for deployment. Default is None, which means no timeout.

- check_timeout – timeout for node deployment checks. Default is 30 seconds.

- stdout_handlers – iterable of ProcessOutputHandlers which will be passed to the actual deploy processes.

- stderr_handlers – iterable of ProcessOutputHandlers which will be passed to the actual deploy processes.
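As an illustration of check_enough_func, the following sketch builds a hypothetical callback that accepts a deployment once every listed cluster has a minimum number of deployed hosts. It relies on two assumptions. First, hosts are either plain hostnames or objects exposing the hostname in an address attribute (assumed here to match execo.host.Host). Second, the cluster name is the part of the short hostname before the last '-'.

```python
from collections import Counter


def make_check_enough(min_per_cluster):
    """Build a callback suitable for check_enough_func (hypothetical helper).

    min_per_cluster maps a cluster name to the minimum number of deployed
    hosts required in that cluster.
    """
    def cluster_of(host):
        # Assumption: Host-like objects expose their name as .address.
        name = getattr(host, "address", host)
        # 'graphene-12.nancy.grid5000.fr' -> 'graphene-12' -> 'graphene'
        return name.split(".")[0].rsplit("-", 1)[0]

    def check_enough(deployed_hosts, undeployed_hosts):
        # Returning True stops any further deployment retry.
        counts = Counter(cluster_of(h) for h in deployed_hosts)
        return all(counts[c] >= n for c, n in min_per_cluster.items())

    return check_enough
```

With such a callback, deploy can stop retrying as soon as the topology can be built, even if some hosts failed.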

Utilities

format_oar_date

execo_g5k.oar.format_oar_date(ts)

Return a string with the date (year, month, day, hour, min, sec, ms) formatted for oar.

The timezone is forced to Europe/Paris, and timezone info is discarded, for g5k oar.

Parameters: ts – a date in one of the formats handled.

format_oar_duration

execo_g5k.oar.format_oar_duration(duration)

Return a string with the duration (hours, mins, secs, ms) formatted for oar.

Parameters: duration – a duration in one of the formats handled.

oar_date_to_unixts

execo_g5k.oar.oar_date_to_unixts(date)

Convert a date in the format returned by oar to a unix timestamp.

The timezone of g5k oar timestamps is Europe/Paris.

oar_duration_to_seconds

execo_g5k.oar.oar_duration_to_seconds(duration)

Convert a duration in the format returned by oar to a number of seconds.
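The duration conversions can be illustrated with a simplified round trip between a number of seconds and an H:MM:SS string, the form OAR accepts for walltimes. This is a sketch, not execo's implementation. The helper names are hypothetical, milliseconds are dropped, and execo's exact output format may differ.

```python
def format_duration(seconds):
    """Format a number of seconds as H:MM:SS (milliseconds are rounded away)."""
    seconds = int(round(seconds))
    hours, rem = divmod(seconds, 3600)
    minutes, secs = divmod(rem, 60)
    return "%d:%02d:%02d" % (hours, minutes, secs)


def duration_to_seconds(duration):
    """Parse an 'H:M:S' duration string into a number of seconds."""
    hours, minutes, secs = (int(part) for part in duration.split(":"))
    return hours * 3600 + minutes * 60 + secs
```

Hours are not wrapped at 24, so a walltime of more than a day stays in the H:MM:SS form.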

get_kavlan_host_name

execo_g5k.utils.get_kavlan_host_name(host, vlanid)

Returns the DNS hostname of a host once switched in a kavlan.
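The naming scheme can be sketched as follows. This assumes Grid5000's convention of inserting -kavlan-<vlanid> after the short host name (for example graphene-12-kavlan-4.nancy.grid5000.fr). kavlan_host_name is a hypothetical stand-in, and get_kavlan_host_name remains the function to use in real code.

```python
def kavlan_host_name(host, vlanid):
    """Hypothetical sketch: append '-kavlan-<vlanid>' to the short name.

    The domain part, if any, is kept unchanged.
    """
    short, sep, domain = host.partition(".")
    return "%s-kavlan-%s%s%s" % (short, vlanid, sep, domain)
```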

G5kAutoPortForwarder

class execo_g5k.utils.G5kAutoPortForwarder(site, host, port)

Context manager for automatically opening a port forwarder if outside Grid5000.

Parameters:

- site – the Grid5000 site to connect to

- host – the host to connect to in the site

- port – the port to connect to on the host

It automatically decides whether port forwarding is needed, depending on whether it runs from inside or outside Grid5000.

When entering the context, it returns a tuple (actual host, actual port) to connect to. This is the real host/port if inside Grid5000, or the forwarded port if outside. The forwarded port, if any, is guaranteed to be operational.

When leaving the context, it kills the port forwarder background process.

Kaconsole

class execo_g5k.kadeploy.KaconsoleProcess(host, frontend_connection_params=None, connection_timeout=20, prompt='root@[^#]*#', **kwargs)

Bases: execo.process.SshProcess

A connection to the console of a Grid5000 node through kaconsole3.

Unlike other process classes, this class leaves the connection open once started, allowing you to send commands (and expect outputs) through the pipe.

Note that only one console can be open to a given node at a time.

Grid5000 API utilities

Execo_g5k provides easy-to-use functions to interact with the Grid5000 API. All static parts of the API are stored locally in $HOME/.execo/g5k_api_cache, and the cache version is checked on the first call to a method. Beware that if the API is not reachable at runtime, the local cache will be used.

Functions for wrapping the Grid5000 REST API. This module also manages a cache of the Grid‘5000 Reference API (hosts and network equipments). The data is stored in $HOME/.execo/g5k_api_cache/ in pickle format.

All queries to the Grid5000 REST API are done with or without credentials, depending on the key api_username of execo_g5k.config.g5k_configuration. If credentials are used, the password is asked for interactively. If the keyring module is available, the password is then stored in the keyring through this module and is not asked for in subsequent executions. (The keyring Python module gives access to system keyring services such as gnome-keyring or kwallet. If a password needs to be changed, do it from the keyring GUI.)

This module is thread-safe.

APIConnection

class execo_g5k.api_utils.APIConnection(base_uri=None, username=None, password=None, headers=None, additional_args=None, timeout=30)

Basic class for easily getting URL contents.

Intended to be used to get content from RESTful APIs, particularly the Grid5000 API.

Parameters:

- base_uri – server base URI. Defaults to g5k_configuration.get('api_uri').

- username – username for the HTTP connection. If None (default), use the default from g5k_configuration.get('api_username'). If False, don’t use a username at all.

- password – password for the HTTP connection. If None (default), get the password from a keyring (if available) or interactively.

- headers – HTTP headers to use. If None (default), default headers accepting JSON answers are used.

- additional_args – a dict of optional arguments (string to string mappings) to append to the URL of all HTTP requests.

- timeout – timeout for the HTTP connection.

Methods:

- get(relative_uri) – get the (response, content) tuple for the given path on the server.

- post(relative_uri, json) – submit the body to a given path on the server, and return the (response, content) tuple.
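To make the role of base_uri and additional_args concrete, here is a minimal sketch of how a request URL could be assembled from them. build_request_url is hypothetical. The joining rule, including appending to an existing query string with '&', is an assumption of the sketch, and APIConnection may build its URLs differently.

```python
from urllib.parse import urlencode


def build_request_url(base_uri, relative_uri, additional_args=None):
    """Hypothetical helper: join base and relative URIs, then append extra args."""
    url = base_uri.rstrip("/") + "/" + relative_uri.lstrip("/")
    if additional_args:
        # Extend an existing query string rather than starting a second one.
        separator = "&" if "?" in url else "?"
        url += separator + urlencode(additional_args)
    return url
```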

Sites

execo_g5k.api_utils.get_g5k_sites()

Get the list of Grid5000 sites. Returns an iterable.

execo_g5k.api_utils.get_site_attributes(site)

Get the attributes of a site (as known to the g5k api) as a dict.

execo_g5k.api_utils.get_site_clusters(site, queues='default')

Get the list of clusters from a site. Returns an iterable.

Parameters:

- site – site name

- queues – queues filter, see execo_g5k.api_utils.filter_clusters

execo_g5k.api_utils.get_site_hosts(site, queues='default')

Get the list of hosts from a site. Returns an iterable.

Parameters:

- site – site name

- queues – queues filter, see execo_g5k.api_utils.filter_clusters

execo_g5k.api_utils.get_site_network_equipments(site)

Get the list of network elements from a site. Returns an iterable.

Clusters

execo_g5k.api_utils.get_g5k_clusters(queues='default')

Get the list of all g5k clusters. Returns an iterable.

Parameters: queues – queues filter, see execo_g5k.api_utils.filter_clusters

execo_g5k.api_utils.get_cluster_attributes(cluster)

Get the attributes of a cluster (as known to the g5k api) as a dict.

execo_g5k.api_utils.get_cluster_hosts(cluster)

Get the list of hosts from a cluster. Returns an iterable.

execo_g5k.api_utils.get_cluster_site(cluster)

Get the site of a cluster.

execo_g5k.api_utils.get_cluster_network_equipments(cluster)

Get the list of the network equipments used by a cluster.

Hosts

execo_g5k.api_utils.get_g5k_hosts(queues='default')

Get the list of all g5k hosts. Returns an iterable.

Parameters: queues – queues filter, see execo_g5k.api_utils.filter_clusters

execo_g5k.api_utils.get_host_attributes(host)

Get the attributes of a host (as known to the g5k api) as a dict.

execo_g5k.api_utils.get_host_site(host)

Get the site of a host.

Works with either a bare hostname or an fqdn.

execo_g5k.api_utils.get_host_cluster(host)

Get the cluster of a host.

Works with either a bare hostname or an fqdn.

execo_g5k.api_utils.get_host_network_equipments(host)

Network equipments

execo_g5k.api_utils.get_network_equipment_attributes(equip)

Get the attributes of a network equipment of a site as a dict.

execo_g5k.api_utils.get_network_equipment_site(equip)

Return the site of a network equipment.

Other

execo_g5k.api_utils.get_resource_attributes(path)

Get generic resource (path on the g5k api) attributes as a dict.

execo_g5k.api_utils.group_hosts(hosts)

Given a sequence of hosts, group them in a dict by sites and clusters.
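The grouping can be sketched by parsing fully qualified hostnames such as graphene-12.nancy.grid5000.fr. This relies on several assumptions: the site is the second DNS label, the cluster is the short name without its trailing -<number>, and the result is nested as {site: {cluster: [hosts]}}. The real group_hosts may rely on the API and may use a different shape. group_hosts_sketch is a hypothetical helper that only accepts strings with at least one dot.

```python
from collections import defaultdict


def group_hosts_sketch(hosts):
    """Group FQDN strings into {site: {cluster: [hosts]}} (hypothetical helper)."""
    grouped = defaultdict(lambda: defaultdict(list))
    for host in hosts:
        # 'graphene-12.nancy.grid5000.fr' -> ('graphene-12', 'nancy')
        short, site = host.split(".")[:2]
        cluster = short.rsplit("-", 1)[0]
        grouped[site][cluster].append(host)
    # Convert the nested defaultdicts to plain dicts for the caller.
    return {site: dict(clusters) for site, clusters in grouped.items()}
```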

execo_g5k.api_utils.canonical_host_name(host)

Convert, if needed, the host name to its canonical form, without the interface, kavlan and ipv6 parts.

Can be given a Host, in which case it returns a Host, or a string, in which case it returns a string. Works with short or fqdn forms of hostnames.
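The canonicalization can be sketched with a regular expression. The sketch assumes that the canonical short name is the shortest prefix of the form <cluster>-<number>. It also assumes that interface, kavlan and ipv6 parts are '-'-separated suffixes after that prefix (for example graphene-12-eth1 or graphene-12-kavlan-4). canonical_name_sketch is a hypothetical helper that handles strings only, not Host objects.

```python
import re

# Shortest '<cluster>-<number>' prefix, followed by any '-...' suffix.
_SHORT_NAME = re.compile(r"^(.+?-\d+)(?:-.*)?$")


def canonical_name_sketch(host):
    """Strip '-eth1', '-kavlan-4', '-ipv6'-style suffixes, keeping the domain."""
    short, sep, domain = host.partition(".")
    match = _SHORT_NAME.match(short)
    if match:
        short = match.group(1)
    return short + sep + domain
```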

execo_g5k.api_utils.filter_clusters(clusters, queues='default')

Filter a list of clusters on their queue(s).

Given a list of clusters, return the filtered list, keeping only clusters that have at least one oar queue matching one in the list of filter queues passed as parameter. The cluster queues are taken from the queues attribute in the Grid5000 API. If this attribute is missing, the cluster is considered to be in the queues [“admin”, “default”, “besteffort”].

Parameters:

- clusters – list of clusters

- queues – a queue name or a list of queues. Clusters are kept in the returned filtered list only if at least one of their queues matches one queue of this parameter. If queues is None or False, no filtering at all is done. By default, keeps clusters in the queue “default”.
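The queue-matching rule described above can be sketched as follows. The real function takes cluster names and reads their queues from the Grid5000 API. This hypothetical helper instead receives a dict mapping each cluster name to its attributes, which is an assumed shape. A missing queues attribute falls back to ["admin", "default", "besteffort"], as documented.

```python
DEFAULT_QUEUES = ["admin", "default", "besteffort"]


def filter_clusters_sketch(cluster_attrs, queues="default"):
    """Keep clusters having at least one queue in `queues` (hypothetical helper).

    cluster_attrs: dict mapping a cluster name to its attributes dict.
    """
    if queues is None or queues is False:
        return list(cluster_attrs)  # no filtering at all
    if isinstance(queues, str):
        queues = [queues]
    wanted = set(queues)
    return [
        name
        for name, attrs in cluster_attrs.items()
        if wanted.intersection(attrs.get("queues", DEFAULT_QUEUES))
    ]
```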

execo_g5k.api_utils.get_hosts_metric(hosts, metric, from_ts=None, to_ts=None, resolution=None)

Get metric values from the Grid‘5000 metrology API.

Parameters:

- hosts – list of hosts

- metric – Grid‘5000 metrology metric to fetch (e.g. “power”, “cpu_user”)

- from_ts – time from which the metric is collected, in any type supported by execo.time_utils.get_unixts, optional.

- to_ts – time until which the metric is collected, in any type supported by execo.time_utils.get_unixts, optional.

- resolution – time resolution, in any type supported by execo.time_utils.get_seconds, optional.

Returns: a dict host -> dict with the entries ‘from’ (unix timestamp in seconds, as returned by the g5k api), ‘to’ (unix timestamp in seconds, as returned by the g5k api), ‘resolution’ (in seconds, as returned by the g5k api), ‘type’ (the type of metric, as returned by the g5k api) and ‘values’ (a list of tuples (timestamp, metric value)).

Some g5k metrics (the kwapi ones) return the timestamps and the values as separate lists. In that case this function only gathers them into tuples. For these metrics, the ‘from’, ‘to’ and ‘resolution’ returned by the g5k api seem inconsistent with the timestamps list; this function makes no correction and returns everything as is. Other g5k metrics (the ganglia ones) only return the values. In that case this function generates the timestamps of the tuples from ‘from’, ‘to’ and ‘resolution’.
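The two answer shapes described above can be normalized into (timestamp, value) tuples as in this sketch. The input layout is an assumption about the raw API payload, in particular the separate 'timestamps' key for kwapi-like answers. The ganglia-like branch rebuilds timestamps from 'from' and 'resolution' only. metric_tuples is a hypothetical helper.

```python
def metric_tuples(entry):
    """Turn one host's metric answer into a list of (timestamp, value) tuples.

    - kwapi-like answers (assumed 'timestamps' key): zip both lists as-is.
    - ganglia-like answers (values only): timestamps start at 'from' and
      advance by 'resolution' seconds per value.
    """
    values = entry["values"]
    if "timestamps" in entry:
        return list(zip(entry["timestamps"], values))
    start, step = entry["from"], entry["resolution"]
    return [(start + i * step, value) for i, value in enumerate(values)]
```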

Planning utilities

This module provides functions to help you plan your experiment on Grid‘5000.

Retrieve resources planning

These functions allow you to retrieve the resources planning, compute the slots and find slots with specific properties.

execo_g5k.planning.get_planning(elements=['grid5000'], vlan=False, subnet=False, storage=False, out_of_chart=False, starttime=None, endtime=None, ignore_besteffort=True, queues='default')

Retrieve the planning of the elements (site, cluster) and other resources. The element planning structure is {'busy': [(123456,123457), ... ], 'free': [(123457,123460), ... ]}.

Parameters:

- elements – a list of Grid‘5000 elements (‘grid5000’, <site>, <cluster>)

- vlan – a boolean to ask for KaVLAN computation

- subnet – a boolean to ask for subnets computation

- storage – a boolean to ask for storage computation

- out_of_chart – if True, consider that days outside weekends are busy

- starttime – start of the time period for which to compute the planning, defaults to now + 1 minute

- endtime – end of the time period for which to compute the planning, defaults to 4 weeks from now

- ignore_besteffort – True by default, to consider the resources with besteffort jobs as available

- queues – list of oar queues for which to get the planning

Returns a dict whose keys are sites. Each value is a dict whose keys are clusters, subnets, kavlan or storage. Each of those values is a dict whose keys are, respectively, hosts, subnet address ranges, vlan numbers or chunk ids, mapped to their planning dicts.

execo_g5k.planning.compute_slots(planning, walltime, excluded_elements=None, include_hosts=False)

Compute the slot limits and find the number of available nodes for each element, for the given walltime.

Returns the list of slots, where a slot is [ start, stop, freehosts ] and freehosts is a dict giving the number of available nodes for each Grid‘5000 element, e.g. {'grid5000': 40, 'lyon': 20, 'reims': 10, 'stremi': 10 }.

WARNING: slots do not include subnets.

Parameters:

- planning – a dict of the resources planning, returned by get_planning

- walltime – the duration during which the resources must be available, in a format supported by get_seconds

- excluded_elements – list of elements that will not be included in the slots computation
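To illustrate what a slot computation involves, here is a simplified sketch over a flat set of hosts. Each host has a {'busy': [...], 'free': [...]} planning like the per-host entries returned by get_planning. Unlike compute_slots, it does not aggregate counts per Grid‘5000 element. The choice of slot limits (every boundary of a free interval) is an assumption of the sketch, and both helper names are hypothetical.

```python
def count_free_hosts(host_plannings, start, walltime):
    """Count hosts with a free interval covering [start, start + walltime]."""
    end = start + walltime
    return sum(
        1
        for planning in host_plannings.values()
        if any(lo <= start and end <= hi for lo, hi in planning["free"])
    )


def slots_sketch(host_plannings, walltime):
    """Return [start, stop, number_of_free_hosts] for consecutive limits."""
    limits = sorted({bound
                     for planning in host_plannings.values()
                     for interval in planning["free"]
                     for bound in interval})
    return [
        [start, stop, count_free_hosts(host_plannings, start, walltime)]
        for start, stop in zip(limits, limits[1:])
    ]
```

For example, with host h1 free on (10, 100) and host h2 free on (0, 50), a walltime of 30 gives one free host from 0, two from 10 and one again from 50.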

execo_g5k.planning.find_first_slot(slots, resources_wanted)

Return the first slot (a tuple start date, end date, resources) where some resources are available.

Parameters:

- slots – list of slots returned by compute_slots

- resources_wanted – a dict of elements that must have some free hosts

execo_g5k.planning.find_max_slot(slots, resources_wanted)

Return the slot (a tuple start date, end date, resources) with the maximum number of nodes available for the given elements.

Parameters:

- slots – list of slots returned by compute_slots

- resources_wanted – a dict of elements that must be maximized

execo_g5k.planning.find_free_slot(slots, resources_wanted)

Return the first slot (a tuple start date, end date, resources) with enough resources.

Parameters:

- slots – list of slots returned by compute_slots

- resources_wanted – a dict describing the wanted resources, e.g. {'grid5000': 50, 'lyon': 20, 'stremi': 10 }
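The search logic of find_free_slot and find_max_slot can be sketched on slots shaped like [start, stop, freehosts]. These helpers are hypothetical. Returning None when nothing matches, and scoring a slot by the sum of free hosts over the chosen elements, are choices of this sketch rather than documented behavior.

```python
def first_free_slot(slots, resources_wanted):
    """Return (start, stop, freehosts) of the first slot where every wanted
    element has at least the requested number of free hosts, else None."""
    for start, stop, freehosts in slots:
        if all(freehosts.get(element, 0) >= n
               for element, n in resources_wanted.items()):
            return start, stop, freehosts
    return None


def max_slot(slots, elements):
    """Return the slot with the largest total of free hosts over `elements`."""
    if not slots:
        return None
    return max(slots, key=lambda slot: sum(slot[2].get(e, 0) for e in elements))
```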

Jobs generation tools

execo_g5k.planning.distribute_hosts(resources_available, resources_wanted, excluded_elements=None, ratio=None)

Distribute the resources over the different sites and clusters.

Parameters:

- resources_available – a dict defining the resources available

- resources_wanted – a dict defining the resources you actually want

- excluded_elements – a list of elements that won’t be used

- ratio – None by default. Otherwise, a float between 0 and 1, to use only a fraction of the resources.

execo_g5k.planning.get_jobs_specs(resources, excluded_elements=None, name=None)

Generate the job specifications from the dict of resources and the blacklisted elements.

Parameters:

- resources – a dict whose keys are Grid‘5000 elements and whose values are the corresponding numbers of nodes

- excluded_elements – a list of elements that won’t be used

- name – the name to give to the jobs

Plot functions¶

-

execo_g5k.planning.draw_gantt(planning, colors=None, show=False, save=True, outfile=None)¶ Draw the hosts planning for the elements you ask (requires Matplotlib)

Parameters: - planning – the dict of elements planning

- colors – a dict to define element coloring, e.g. {'element': (255., 122., 122.)}

- show – display the Gantt diagram

- save – save the Gantt diagram to outfile

- outfile – specify the output file

-

execo_g5k.planning.draw_slots(slots, colors=None, show=False, save=True, outfile=None)¶ Draw the number of nodes available for the clusters (requires Matplotlib >= 1.2.0)

Parameters: - slots – a list of slots, as returned by compute_slots

- colors – a dict to define element coloring, e.g. {'element': (255., 122., 122.)}

- show – display the slots versus time

- save – save the plot to outfile

- outfile – specify the output file

Grid5000 Charter¶

g5k_charter_remaining¶

g5k_charter_time¶

-

execo_g5k.charter.g5k_charter_time(t)¶ Is the given date in a g5k charter time period?

Returns a boolean: True if the given date is in a period where the g5k charter needs to be respected, False if it is in a period where the charter does not apply (nights, weekends, non-working days)

Parameters: t – a date in a type supported by execo.time_utils.get_unixts
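As an illustration of what such a check involves, the sketch below encodes an assumed charter window of Monday to Friday, 09:00 to 19:00 local time. It works on datetime objects instead of the types accepted by execo.time_utils.get_unixts, ignores public holidays, and in_charter_period is a hypothetical name; the real g5k_charter_time may apply different rules.

```python
from datetime import datetime, time

# Assumed charter window: Monday to Friday, 09:00-19:00 local time.
# Public holidays are deliberately ignored in this sketch.
CHARTER_START = time(9, 0)
CHARTER_END = time(19, 0)


def in_charter_period(dt: datetime) -> bool:
    """Return True if dt falls inside the assumed charter window."""
    if dt.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return False
    return CHARTER_START <= dt.time() < CHARTER_END


print(in_charter_period(datetime(2024, 1, 8, 10, 0)))   # True: Monday morning
print(in_charter_period(datetime(2024, 1, 13, 10, 0)))  # False: Saturday
```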

get_next_charter_period¶

-

execo_g5k.charter.get_next_charter_period(start, end)¶ Return the next g5k charter time period.

Parameters: - start – timestamp in a type supported by execo.time_utils.get_unixts from which to start searching for the next g5k charter time period. If start is in a g5k charter time period, the returned g5k charter time period starts at start.

- end – timestamp in a type supported by execo.time_utils.get_unixts until which to search for the next g5k charter time period. If end is in the g5k charter time period, the returned g5k charter time period ends at end.

Returns: a tuple (charter_start, charter_end) of unix timestamps, or (None, None) if no g5k charter time period is found
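Under the same assumed window (weekdays, 09:00 to 19:00, holidays ignored), the search can be sketched as a day-by-day scan that clips each day's window to [start, end]. next_charter_period is hypothetical and returns datetime objects, whereas the documented function returns unix timestamps.

```python
from datetime import datetime, time, timedelta
from typing import Optional, Tuple

# Assumed charter window, as in the previous sketch
CHARTER_START = time(9, 0)
CHARTER_END = time(19, 0)


def next_charter_period(start: datetime,
                        end: datetime) -> Tuple[Optional[datetime], Optional[datetime]]:
    """Return the first charter window clipped to [start, end], or (None, None)."""
    day = start.date()
    # Stop once a day's window would begin at or after the end of the search range
    while datetime.combine(day, CHARTER_START) < end:
        if day.weekday() < 5:  # Monday to Friday
            period_start = max(start, datetime.combine(day, CHARTER_START))
            period_end = min(end, datetime.combine(day, CHARTER_END))
            if period_start < period_end:
                return period_start, period_end
        day += timedelta(days=1)
    return None, None


# Friday evening to Tuesday noon: the next window is Monday 09:00-19:00
print(next_charter_period(datetime(2024, 1, 12, 20, 0), datetime(2024, 1, 16, 12, 0)))
```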

Grid5000 Topology¶

A module based on networkx to create a topological graph of the Grid'5000 platform. “Nodes” represent elements (compute nodes, switches, routers, Renater equipment) and “edges” represent network links. Nodes have a kind attribute (plus power and core for compute nodes), whereas edges carry bandwidth and latency information.

All information comes from the Grid'5000 reference API.

-

class

execo_g5k.topology.g5k_graph(elements=None)¶ Main graph representing the topology of the Grid'5000 platform. All node elements are defined by their FQDN.

Create the MultiGraph() representing the Grid'5000 network topology.

Parameters: sites – add the topology of the given site(s)

-

add_backbone()¶ Add the nodes corresponding to Renater equipments

-

add_cluster(cluster, data=None)¶ Add the cluster to the graph

-

add_equip(equip, site)¶ Add a network equipment

-

add_host(host, data=None)¶ Add a host in the graph

Parameters: - host – a string corresponding to the node name

- data – a dict containing the Grid‘5000 host attributes

-

add_site(site, data=None)¶ Add a site to the graph

-

get_backbone()¶ Return

-

get_clusters(site=None)¶ Return the list of clusters

-

get_equip_hosts(equip)¶ Return the nodes which are connected to the equipment

-

get_host_adapters(host)¶ Return the mountable network interfaces from a host

-

get_host_neighbours(host)¶ Return the compute nodes that are connected to the same switch, router or linecard

-

get_hosts(cluster=None, site=None)¶ Return the list of nodes corresponding to hosts

-

get_site_router(site)¶ Return the node corresponding to the router of a site

-

get_sites()¶ Return the list of sites

-

rm_backbone()¶ Remove all elements from the backbone

-

rm_cluster(cluster)¶ Remove the cluster from the graph

-

rm_equip(equip)¶ Remove an equipment from the graph

-

rm_host(host)¶ Remove the host from the graph

-

rm_site(site)¶ Remove the site from the graph
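The graph structure behind methods such as get_host_neighbours can be pictured with plain dictionaries. In this hypothetical toy topology each node carries a kind (the kind strings are assumptions) and links are undirected; host_neighbours mimics the documented behaviour, returning the compute nodes attached to the same switch, router or linecard, without using networkx or the g5k_graph class.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical toy topology: node name -> kind, plus undirected links
kinds: Dict[str, str] = {
    "gw-lyon": "router",
    "sw-1": "switch",
    "node-1.lyon": "node",
    "node-2.lyon": "node",
    "node-3.lyon": "node",
}
links: List[Tuple[str, str]] = [
    ("gw-lyon", "sw-1"),
    ("sw-1", "node-1.lyon"),
    ("sw-1", "node-2.lyon"),
    ("gw-lyon", "node-3.lyon"),
]

# Adjacency sets built once from the link list
adjacency: Dict[str, Set[str]] = defaultdict(set)
for a, b in links:
    adjacency[a].add(b)
    adjacency[b].add(a)

EQUIPMENT_KINDS = {"switch", "router", "linecard"}


def host_neighbours(host: str) -> Set[str]:
    """Compute nodes sharing a switch, router or linecard with host."""
    result: Set[str] = set()
    for equip in adjacency[host]:
        if kinds[equip] in EQUIPMENT_KINDS:
            result.update(n for n in adjacency[equip]
                          if n != host and kinds[n] == "node")
    return result


print(host_neighbours("node-1.lyon"))  # {'node-2.lyon'}
```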

-

-

execo_g5k.topology.treemap(gr, nodes_legend=None, edges_legend=None, nodes_labels=None, layout='neato', compact=False)¶ Create a treemap of the topology and return a matplotlib figure

Parameters: - nodes_legend – a dict of dicts containing the parameters used to draw the nodes, such as 'myelement': {'color': '#9CF7BC', 'shape': 'p', 'size': 200}

- edges_legend – a dict of dicts containing the parameters used to draw the edges, such as bandwidth: {'width': 0.2, 'color': '#666666'}

- nodes_labels – a dict of dicts containing the font parameters for the labels, such as 'myelement': {'nodes': {}, 'font_size': 8, 'font_weight': 'bold', 'str_func': lambda n: n.split('.')[1].title()}

- layout – the graphviz tool used to compute node positions

- compact – represent only one node per cluster/cabinet

WARNING: This function uses matplotlib.figure, which by default requires a DISPLAY. To use it on a headless host, change the matplotlib backend before importing the execo_g5k.topology module.
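On a headless host, one common way to follow this advice is to select matplotlib's non-interactive Agg backend at the very top of the script. The sketch shows only the ordering; the execo_g5k import is left as a comment so that the snippet runs without execo_g5k installed.

```python
import matplotlib

# Select a non-interactive backend before anything imports matplotlib.pyplot;
# "Agg" renders to files and does not need a DISPLAY.
matplotlib.use("Agg")

# Only after this point import the topology module, e.g.:
# from execo_g5k.topology import g5k_graph, treemap

print(matplotlib.get_backend())
```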

Configuration¶

This module may be configured at import time by defining two dicts, g5k_configuration and default_frontend_connection_params, in the file ~/.execo.conf.py

The g5k_configuration dict contains global g5k configuration parameters.

-

execo_g5k.config.g5k_configuration= {'api_additional_args': {}, 'api_timeout': 30, 'api_uri': 'https://api.grid5000.fr/3.0/', 'api_username': None, 'api_verify_ssl_cert': True, 'check_deployed_command': "! (mount | grep -E '^/dev/[[:alpha:]]+2 on / ')", 'default_env_file': None, 'default_env_name': None, 'default_frontend': None, 'default_timeout': 900, 'kadeploy3': 'kadeploy3', 'kadeploy3_options': '-k -d', 'no_ssh_for_local_frontend': False, 'oar_job_key_file': None, 'oar_pgsql_ro_db': 'oar2', 'oar_pgsql_ro_password': 'read', 'oar_pgsql_ro_port': 5432, 'oar_pgsql_ro_user': 'oarreader', 'polling_interval': 20, 'tiny_polling_interval': 10}¶ Global Grid5000 configuration parameters.

- kadeploy3: kadeploy3 command.

- kadeploy3_options: a string with kadeploy3 command line options.

- default_env_name: a default environment name to use for deployments (as registered to kadeploy3).

- default_env_file: a default environment file to use for deployments (for kadeploy3).

- default_timeout: default timeout for all calls to g5k services (except deployments).

- check_deployed_command: default shell command used by execo_g5k.kadeploy.deploy to check that the nodes are correctly deployed. This command should return 0 if the node is correctly deployed, or another value otherwise. The default checks that the root is not on the second partition of the disk.

- no_ssh_for_local_frontend: if True, don't use ssh to issue g5k commands for the local site. If False, always use ssh, both for remote frontends and for the local site. Set it to True only if you are sure that your scripts always run on the local frontend.

- polling_interval: time interval between pollings for various operations, e.g. waiting for an oar job to start.

- tiny_polling_interval: small time interval between pollings for various operations. Used for example when waiting for a job start, when the start date of the job is over but the job is not yet in running state.

- default_frontend: address of the default frontend.

- api_uri: base uri for the g5k api server.

- api_username: username to use for requests to the Grid5000 REST API. If None, no credentials will be used, which is fine when running on a Grid5000 frontend.

- api_additional_args: additional arguments to append at the end of all requests to the g5k api. May be used to request the testing branch (use: api_additional_args = {'branch': 'testing'}).

- api_timeout: timeout in seconds of all api requests, before raising an exception.

- api_verify_ssl_cert: if set to False, disables ssl certificate checks for api https requests.

- oar_job_key_file: ssh key to use for oar. If defined, takes precedence over the environment variable OAR_JOB_KEY_FILE.

Its default values are:

g5k_configuration = {
    'kadeploy3': 'kadeploy3',
    'kadeploy3_options': '-k -d',
    'default_env_name': None,
    'default_env_file': None,
    'default_timeout': 900,
    'check_deployed_command': "! (mount | grep -E '^/dev/[[:alpha:]]+2 on / ')",
    'no_ssh_for_local_frontend': False,
    'polling_interval': 20,
    'tiny_polling_interval': 10,
    'default_frontend': None,
    'api_uri': "https://api.grid5000.fr/3.0/",
    'api_username': None,
    'api_additional_args': {},
    'api_timeout': 30,
    'api_verify_ssl_cert': True,
    'oar_job_key_file': None,
    'oar_pgsql_ro_db': 'oar2',
    'oar_pgsql_ro_user': 'oarreader',
    'oar_pgsql_ro_password': 'read',
    'oar_pgsql_ro_port': 5432,
}
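As an illustration, a ~/.execo.conf.py that changes a few of these parameters might look like the sketch below. It assumes, as the example in Running from outside Grid5000 suggests, that keys left out keep their default values; the key path and the numeric values are placeholders to adapt.

```python
# Hypothetical ~/.execo.conf.py fragment: only the overridden keys are listed.
import os

g5k_configuration = {
    # use your internal Grid5000 key for oar jobs (placeholder path)
    'oar_job_key_file': os.path.expanduser('~/.ssh/id_rsa'),
    # poll oar every 30 seconds instead of the default 20
    'polling_interval': 30,
    # give slow Grid5000 API requests more time than the default 30 seconds
    'api_timeout': 60,
}
```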

The default_frontend_connection_params dict contains default parameters for remote connections to Grid5000 frontends.

-

execo_g5k.config.default_frontend_connection_params= {'host_rewrite_func': <function make_default_frontend_connection_params.<locals>.<lambda>>, 'pty': True}¶ Default connection params when connecting to a Grid5000 frontend.

Its default values are:

default_frontend_connection_params = {
    'pty': True,
    'host_rewrite_func': lambda host: host + ".grid5000.fr"
}

In default_frontend_connection_params, the host_rewrite_func configuration variable is set to automatically map a site name to its corresponding frontend, so that all commands are run on the proper frontends.

default_oarsh_oarcp_params contains default connection parameters suitable for connecting to Grid5000 nodes with oarsh / oarcp.

-

execo_g5k.config.default_oarsh_oarcp_params= {'pty': True, 'scp': 'oarcp', 'ssh': 'oarsh', 'taktuk_connector': 'oarsh'}¶ A convenient, predefined connection parameters dict with oarsh / oarcp configuration.

See execo.config.make_default_connection_params

Its default values are:

default_oarsh_oarcp_params = {
    'ssh': 'oarsh',
    'scp': 'oarcp',
    'taktuk_connector': 'oarsh',
    'pty': True,
}

OAR keys¶

By default, oar generates job-specific ssh keys, so one has to retrieve these keys and use them explicitly to connect to the jobs, which is painful. Another possibility is to tell oar to use specific keys: oar automatically uses the key pointed to by the environment variable OAR_JOB_KEY_FILE if it is defined. So the most convenient way to use execo with oar is to set OAR_JOB_KEY_FILE in your ~/.profile to point to your internal Grid5000 ssh key and export this environment variable, or to use the oar_job_key_file entry of execo_g5k.config.g5k_configuration.
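The precedence between the two mechanisms (the oar_job_key_file entry wins over OAR_JOB_KEY_FILE, as documented for g5k_configuration) can be summarized in a few lines. resolve_oar_key_file is a hypothetical helper written for illustration, not a function of execo_g5k, and the key path is a placeholder.

```python
import os
from typing import Optional


def resolve_oar_key_file(g5k_configuration: dict) -> Optional[str]:
    """Return the ssh key file oar should use, or None for oar's job-specific keys."""
    configured = g5k_configuration.get('oar_job_key_file')
    if configured is not None:
        # an explicit configuration entry takes precedence
        return configured
    # otherwise fall back to the environment variable, if exported
    return os.environ.get('OAR_JOB_KEY_FILE')


os.environ['OAR_JOB_KEY_FILE'] = '/home/user/.ssh/id_rsa'  # placeholder path
print(resolve_oar_key_file({'oar_job_key_file': None}))  # /home/user/.ssh/id_rsa
```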

Running from another host than a frontend¶

Note that when running a script from a host other than a frontend (from a node, or from your laptop outside Grid5000), everything will work except oarsh / oarcp connections, since these executables only exist on frontends. In this case you can still connect by using ssh / scp as user oar on port 6667 (i.e. use connection_params = {'user': 'oar', 'port': 6667}). This is the port where an oar-specific ssh server is listening; this server then switches from user oar to the user currently owning the node.
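For reference, connection_params = {'user': 'oar', 'port': 6667} roughly corresponds to an ssh invocation like the one built below. oar_ssh_command is a hypothetical helper and the node name is a placeholder; execo assembles its own command line from the connection parameters and may add further options.

```python
import shlex
from typing import List, Optional


def oar_ssh_command(node: str, command: str, keyfile: Optional[str] = None) -> List[str]:
    """Build an ssh argv reaching node through the oar-specific server on port 6667."""
    argv = ["ssh", "-p", "6667", "-l", "oar"]
    if keyfile:
        argv += ["-i", keyfile]  # same role as the 'keyfile' connection parameter
    argv += [node, command]
    return argv


# Hypothetical node name
print(shlex.join(oar_ssh_command("node-1.lyon.grid5000.fr", "hostname")))
# ssh -p 6667 -l oar node-1.lyon.grid5000.fr hostname
```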

Running from outside Grid5000¶

Execo scripts can be run from outside grid5000 with a subtle configuration of both execo and ssh.

First, in ~/.ssh/config, declare aliases for g5k connections (through the access machine). For example, the following declares *.g5k aliases and a g5k alias (which reaches lyon), all going through access.grid5000.fr:

Host *.g5k g5k
    ProxyCommand ssh access.grid5000.fr "nc -q 0 `echo %h | sed -ne 's/\.g5k$//p;s/^g5k$/lyon/p'` %p"

Then in ~/.execo.conf.py put this code:

import re

default_connection_params = {
    'host_rewrite_func': lambda host: re.sub(r'\.grid5000\.fr$', '.g5k', host),
    'taktuk_gateway': 'g5k'
}

default_frontend_connection_params = {
    'host_rewrite_func': lambda host: host + ".g5k"
}

g5k_configuration = {
    'api_username': '<g5k_username>',
}

Now, every time execo tries to connect to a host, the host name is rewritten so that it is reached through the Grid5000 ssh proxy connection alias, and the same applies to frontends.
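To check how the two sides fit together, the sketch below chains the host_rewrite_func substitutions from the execo configuration above with a Python re-implementation of the sed expression in the ProxyCommand. The function names are hypothetical and the node name is a placeholder.

```python
import re
from typing import Optional


def rewrite_node(host: str) -> str:
    """Same substitution as host_rewrite_func in default_connection_params."""
    return re.sub(r'\.grid5000\.fr$', '.g5k', host)


def rewrite_frontend(site: str) -> str:
    """Same mapping as host_rewrite_func in default_frontend_connection_params."""
    return site + ".g5k"


def proxy_target(alias: str) -> Optional[str]:
    """What the sed expression in the ProxyCommand prints for %h.

    'X.g5k' becomes 'X', the bare alias 'g5k' becomes 'lyon', and any other
    name produces no output (None here).
    """
    if alias.endswith(".g5k"):
        return alias[:-len(".g5k")]
    if alias == "g5k":
        return "lyon"
    return None


print(proxy_target(rewrite_node("node-1.lyon.grid5000.fr")))  # node-1.lyon
print(proxy_target(rewrite_frontend("nancy")))  # nancy
```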

The perfect grid5000 connection configuration¶

- use separate ssh keys for connecting from outside to Grid5000 and inside Grid5000:

  - use your regular ssh key for connecting from outside Grid5000 to inside Grid5000, by adding your regular public key to ~/.ssh/authorized_keys on each site's nfs.

  - generate (with ssh-keygen) a specific Grid5000 private/public key pair, without passphrase, for navigating inside Grid5000. Replicate ~/.ssh/id_dsa and ~/.ssh/id_dsa.pub (for a dsa key pair, or the equivalent rsa keys for an rsa key pair) to ~/.ssh/ on each site's nfs, and add ~/.ssh/id_dsa.pub to ~/.ssh/authorized_keys on each site's nfs.

- add export OAR_JOB_KEY_FILE=~/.ssh/id_dsa (or id_rsa) to each site's ~/.bash_profile

- Connections should then work directly with oarsh/oarcp if you use the execo_g5k.config.default_oarsh_oarcp_params connection parameters. Connections should also work directly with ssh for nodes reserved with the allow_classic_ssh option. Finally, for deployed nodes, connections should work directly because option -k is passed to kadeploy3 by default.

TODO: Currently, due to an ongoing bug or misconfiguration (see https://www.grid5000.fr/cgi-bin/bugzilla3/show_bug.cgi?id=3302), oar fails to access the ssh keys if they are not world-readable, so you need to make them so.
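Whether a key file currently satisfies this workaround can be checked from Python by testing the "others" read permission bit. is_world_readable is a hypothetical helper, and the demonstration uses a throwaway temporary file rather than a real key.

```python
import os
import stat
import tempfile


def is_world_readable(path: str) -> bool:
    """True if the 'others' read permission bit is set on path."""
    return bool(os.stat(path).st_mode & stat.S_IROTH)


with tempfile.NamedTemporaryFile() as tmp:
    os.chmod(tmp.name, 0o600)  # typical private key permissions
    private = is_world_readable(tmp.name)
    os.chmod(tmp.name, 0o644)  # readable by everyone
    shared = is_world_readable(tmp.name)

print(private, shared)  # False True (on POSIX systems)
```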

Note also that configuring default_oarsh_oarcp_params to bypass oarsh/oarcp and connect directly to port 6667 will save you from many problems, such as a high number of open ptys, or the impossibility of killing oarsh / oarcp processes (because they run under sudo):

default_oarsh_oarcp_params = {
    'user': "oar",
    'keyfile': "path/to/ssh/key/used/for/oar",
    'port': 6667,
    'ssh': 'ssh',
    'scp': 'scp',
    'taktuk_connector': 'ssh',
}